On March 6, Alibaba released and open-sourced its new reasoning model, QwQ-32B, which has 32 billion parameters. Despite being far smaller than DeepSeek-R1, which has 671 billion parameters (with 37 billion active), QwQ-32B matches its performance on various benchmarks. QwQ-32B excelled in math and coding tests, outperforming OpenAI’s o1-mini and distilled versions of DeepSeek-R1. It also scored higher than DeepSeek-R1 in some evaluations, such as LiveBench and IFEval. The model leverages reinforcement learning and integrates agent capabilities for critical thinking and adaptive reasoning. Notably, QwQ-32B requires much less computational power, making it deployable on consumer-grade hardware. This release aligns with Alibaba’s AI strategy, which includes significant investments in cloud and AI infrastructure. Following the release, Alibaba’s US stock rose 8.61% to $141.03, with Hong Kong shares up over 7%. [Jiemian, in Chinese]

Related Articles

2025-06-27 01:58

1445 views

Touring Logitech's Audio HQ

In searching for the perfect wireless gaming headset, I recently expressed my overall dissatisfactio

Read More

2025-06-27 00:23

2905 views

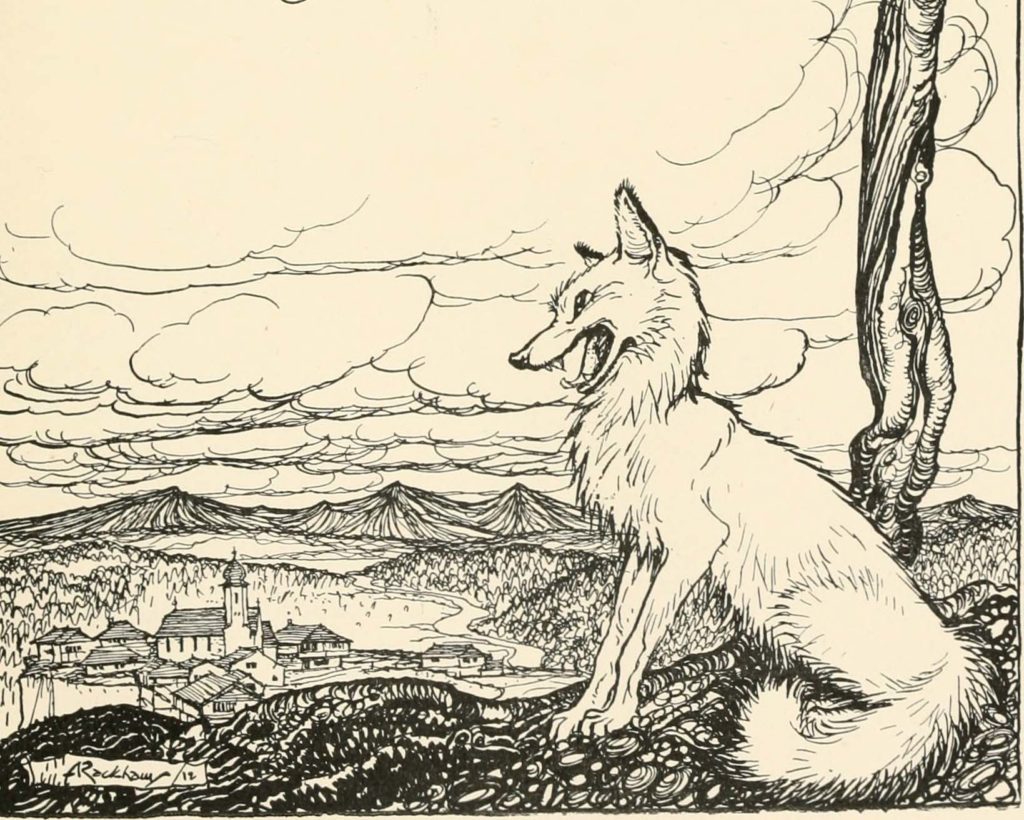

All the Better to Hear You With by Sabrina Orah Mark

All the Better to Hear You WithBy Sabrina Orah MarkSeptember 8, 2020HappilySabrina Orah Mark’s colum

Read More

2025-06-26 23:56

2165 views

Editing Justice Ginsburg by David Ebershoff

Editing Justice GinsburgBy David EbershoffSeptember 22, 2020Arts & CultureAn editor recalls the

Read More